Many attempts at creating effective user experiences with artificial intelligence (AI) produce newsworthy results but do little to drive mainstream adoption.

For most consumers, routine AI use is still not an everyday habit (broadly speaking, at least; the picture may differ within certain demographic and interest groups, such as tech enthusiasts). Few people depend on AI assistants the way they depend on, say, word processors, Google Search or mobile devices.

Intelligent assistants are still a novelty, and that may stem from how they are designed. Most people don’t want to converse with machines about philosophy (cool as that might be); they just want to look something up. People don’t want a virtual girl in a holographic display asking how their day went when they come home from work. People also seem naturally inclined to troll chatbots, probing the limits of their “intelligence” and perhaps having a laugh while at it.

In fact, the more we try to frame AI use around human-specific activities, the less it gets used. This is perhaps a result of our bias towards emulating the all-knowing, all-powerful and very personal, witty AI found in films like Her or in sci-fi games like Halo. This kind of cultural hinting leads many people to believe that because an AI is presented in a human-like fashion, it should act like a human. In almost all cases, though, you’ll probably see through the facade within 10 minutes of continuous use.

AI simply isn’t human, and designers shouldn’t help us pretend that it is.

Instead of approaching AI interface design purely from the perspective of our sci-fi fantasies (who knows the future anyway?), we could start with the user: what do they actually need? They probably don’t need an AI with a built-in personality.

They might instead need an AI that performs small but useful tasks efficiently and reliably. Successful intelligent agents that do just that usually lack any form of personality or similar bells and whistles.

Take Google’s strategy: its assistant and intelligent products haven’t a hint of personality beyond the voice used for voice search. This cuts a lot of cultural and psychological baggage out of the interaction. Since the product clearly presents itself as a machine, people are not led to believe that it can do human-like things (the occasional witty answer or piece of trivia aside). This can be beneficial because the user isn’t distracted by the product’s attempts at sounding human. Instead, the user is empowered without even realising it. It just works.

I used to think building an assistant as cold and impersonal as Google Now would be a bad move, but I can now see the logic when comparing Google Now to the competition. Cortana looks so lively, and yet she disappoints me precisely because I keep looking for a human-likeness that all but disappears with continued use. Ditto for Siri. I get so distracted by their apparently witty personalities that I can’t seem to get at their actual functionality. Why do I need a witty robot in the house?

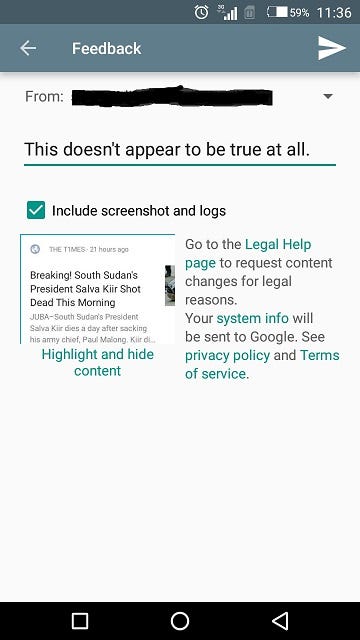

By far the most interesting feature Google has added to its Google Now app is just as impersonal, but in my opinion extremely useful. Now on Tap recognises elements displayed on the phone’s screen at any time, letting you search without leaving whatever app is open. If it doesn’t detect what you want, you can highlight it and it will search for that instead. It is a perfect design: minimalistic, useful and brutally impersonal. It just works.

Intelligent apps shouldn’t be built to seem explicitly human; they should be built to get actual work done, without distracting the user. I know this might not be the kind of interesting personal robot some of us imagined, but it is probably the only way to make things less awkward between man and machine. The uncanny valley is not where you want your app to end up, and certainly not where the consumer AI market as a whole should end up.

AI is still an emerging technology, like the Internet before it went mainstream. We are still learning how to integrate it into the normal, everyday workflow of average people. Human-AI UX design choices may prove to be what makes or breaks consumer AI applications. The potential rewards for solving the design problem are tantalising; designers must keep searching for problem-solution fits that real people care about, and design around them.